Visual Intelligence: The First Decade of Computer Art (1965-1975)

Author(s): Frank Dietrich

Source: Leonardo, Vol. 19, No. 2 (1986), pp. 159-169

Published by: The MIT Press

Stable URL: http://www.jstor.org/stable/1578284


Abstract: The author traces developments in computer art worldwide from 1965, when the first computer art exhibitions were held by scientists, through succeeding periods in which artists collaborated with scientists to create computer programs for artistic purposes. The end of the first decade of computer art was marked by economic, technological and programming advances that allowed artists more direct access to computers, high quality images and virtually unlimited color choices.

I. INTRODUCTION

The year 1984 has been synonymous with the fundamental Orwellian pessimism of a future mired in technological alienation. Yet one year later, in 1985, we are celebrating the official maturity of an art form born just 20 years ago: computer art. These adjacent dates, one fictional, the other factual, share an intimate relationship with technological development in general and the capabilities of imaging machines in particular.

II. EARLY WORK AND THE FIRST COMPUTER ART SHOWS

Computer art represents a historical breakthrough in computer applications. For the first time computers became involved in an activity that had been the exclusive domain of humans: the act of creation. The number of Ph.D.s among its early practitioners emphasized the heavily academic nature of the art form.

The first computer art exhibitions, which ran almost concurrently in 1965 in the US and Germany, were held not by artists at all, but by scientists: Bela Julesz and A. Michael Noll at the Howard Wise Gallery, New York; and Georg Nees and Frieder Nake at Galerie Niedlich, Stuttgart, Germany.

Noll and Julesz conducted their visual research at Bell Laboratories in New Jersey. The Murray Hill lab became one of the hotbeds for the development of computer graphics. Also working there were Manfred Schroeder, Ken Knowlton, Leon Harmon, Frank Sinden and E.E. Zajac, who all belonged to the first generation of 'computer artists'. These scientists were motivated mainly by research related to visual phenomena: visualization of acoustics and the foundations of binocular vision. Researchers at Bell contributed a wealth of information to spur the growth of computer graphics. Numerous computer animations were produced, mostly for educational purposes, but a few artistic experiments were also conducted. Julesz and Noll worked on the display of stereoscopic images. Noll developed the mathematics for N-dimensional projections, as well as a 3D tactile-input device. Harmon and Knowlton devised automatic digitizing methods for images, work related to a project on sampling and plotting of voice data under the direction of Manfred Schroeder. Ken Knowlton contributed several graphics languages for animation. Throughout this article I will discuss the research conducted at Bell Labs in further detail [1-5].

The German center of activity was established at the Technische Universität Stuttgart under the influence of philosopher Max Bense. Bense's main areas of research covered the history of science and the mathematics of aesthetics. He coined the terms 'artificial art' and 'generative aesthetics' in his main work, Aesthetica [6].

Advances in both computer music and poetry (or text processing) formed the context and also offered initial guidelines for computer art. Computer-generated texts were produced in Stuttgart beginning in 1960. An entire branch of text analysis relied heavily on computer-processed statistics dealing with vocabulary, length and type of sentences, etc. [7].

Electronic music studios predated computer art studios, leading a number of visual artists to seek information about computers from university music departments. Dutch artist Peter Struycken, for instance, took a course in electronic music offered by the composer G.M. Koenig at one of the few European centers, the Instituut voor Sonologie at the Rijks Universiteit at Utrecht [8].

Lejaren A. Hiller programmed the "Illiac Suite" in 1957 on the ILLIAC computer at the University of Illinois, Champaign, and composed the well-known "Computer Cantata" with Robert A. Baker in 1963 [9]. Some musicians who were using computers as a compositional tool also created graphics in an attempt to foster synergism between the arts (Iannis Xenakis, Herbert Brün, etc.) [10-19]. The filmmaker John Whitney, on the other hand, began structuring his computer animations according to harmonies of the musical scale and later called this concept Digital Harmony [20].

Only in a second phase did artists become involved and participate with scientists in three large-scale shows: "Cybernetic Serendipity," organized in London for the Institute of Contemporary Arts by Jasia Reichardt (1968); "Some More Beginnings," a show organized by Experiments in Art and Technology at the Brooklyn Museum, New York (1968); and "Software," curated by Jack Burnham at the Jewish Museum in New York (1970). The catalogs of these shows still represent some of the best overviews of emerging approaches to computers and other technologies for artistic purposes [21-24]. These shows presented the first results and publicly questioned the relationship of computers and art. They attracted many more artists to the growing field of computer art but did not succeed in making the art world in general more receptive to the new art form.

III. THE TECHNOLOGICAL ARTIST AND THE COMPUTER

When we look at the handful of early computer art aficionados, certain patterns emerge. All scientists and artists in this group belong to the same generation, born between the two World Wars, approximately 1925-1940. Their heritage is not bound by national borders; rather it is international, representing the highly industrialized countries of Europe, North America and Japan.

Initially, artists saw a very utilitarian advantage in using the computer as an accelerator for "high-speed visual thinking" [25].

Robert Mallary calls this the synergistic use of the computer in the context of man-machine interactions. He refers to the computer's "application as a tool for enhancing the on-the-spot creative power and productivity of the artist by accelerating and telescoping the creative process and by making available to its user a multitude of design options that otherwise might not occur to him" [26].

Because these artists were not interested in descriptive or elaborate painting, they could allow themselves to relate to the simple imagery generated by computers. Their interest was fueled by other capabilities of the computer, for instance its ability to allow the artist to be an omnipotent creator of a new universe with its own physical laws. Charles Csuri pointed out this far-reaching concept in an interview: "I can use a well-known physical law as a point of departure, and then, quite arbitrarily, I can change the numerical values, which essentially changes the reality. I can have light travel five times faster than the speed of light, and in a sense put myself in a position of creating my own personal science fiction" [27].

Many of the artists were constructivists. They were accustomed to arranging form and color logically and voluntarily restricting themselves to a few well-defined image elements. They tried to focus on the act of seeing and perception by stripping away any notion of content. The French Groupe de Recherche d'Art Visuel, or GRAV, was a proponent of this direction and became instrumental in the Op Art and Kinetic Art movements of the 1960s [28]. Vera Molnar, a cofounder of GRAV, conceived a "Machine Imaginaire" to enable her "to produce combinations of forms never seen before, either in nature or in museums, to create unimaginable images."

She realized that the computer could permit her "to go beyond the bounds of learning, cultural heritage, environment -in short, of the social thing, which we must consider to be our second nature" [29].

Conventional aesthetics and their social-psychological connotations were seen as a hindrance to creative visual research. It was precisely the computer's nonhumanness that was understood to free art from these influences. Art critics who pointed out the cool and mechanical look of the first results of computer art did not grasp the implications of this concept.

According to the Japanese artist Hiroshi Kawano, who started producing computer art in 1959 [30], human standards of aesthetics are not applicable to computer art. Instead the works generated by a computer require from the artist (or critic) "a rigorous stoicism against beauty." For Kawano, the computer artist's only function is to teach the computer how to make art by programming an algorithm. Thus the artist/programmer has become a "meta artist," and the executing artist could be the computer itself, the "art-computer" [31].

One artist who explicitly taught the computer is Harold Cohen. He wanted to automate the process of drawing, or, to be more precise, Cohen wanted to have a computer simulate his personal style of drawing. Cohen set out in 1973 to create an expert drawing system at Stanford University's Artificial Intelligence Laboratory. The computer was programmed to model the essence of Cohen's creative strategies. The program contained a large repertoire of various forms and shapes he had been using previously in his paintings. The other main component was a 'space-finder' to establish compositional relationships heuristically between forms on one plane. Well-defined rules and random number generators guaranteed the creation of never-ending variations of drawings with a very distinctive style [32, 33].

Cohen's project AARON is an early example of a functioning, harmonious symbiosis between man and machine that enables the team to achieve a high level of performance. Increasingly sophisticated relationships between artists and computers have been classified by Robert Mallary in his article "Computer Sculpture: Six Levels of Cybernetics." He speculates that the computer could develop into an autonomous organism, capable of self-replication. Even if the machines could never actually be "alive," Mallary suggests their potential superiority. "The computer, while not alive in any organic sense, might just as well be, if it were to be judged solely on the basis of its capabilities and performances-which are so superlative that the sculptor, like a child, can only get in the way" [34].

But this prediction sounded like pure fantasy to those trying to enable the computer to assist the artist with very simple tasks. Especially in the beginning, interfacing with computers required artists to collaborate extensively with programmers.

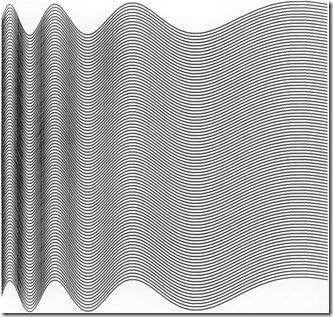

Fig. 1. A. Michael Noll, Bridget Riley's painting "Currents", 1966. An early attempt at simulating an existing painting with a computer. Much of 'op art' uses repetitive patterns that usually can be expressed very simply in mathematical terms. These waveforms were generated as parallel sinusoids with linearly increasing period and drawn on a microfilm plotter. A. Michael Noll also approximated Piet Mondrian's painting Composition with Lines statistically and created a digital version with pseudorandom numbers. Xerographic reproductions of both pictures were shown to 100 subjects, and 59 of them preferred the computer-generated picture.
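The phrase "parallel sinusoids with linearly increasing period" can be made concrete with a short Python sketch. It assumes that the period grows linearly along the horizontal axis and that every curve is the same waveform shifted vertically; the function name `riley_currents` and all parameter values are hypothetical, and the code returns coordinate lists rather than driving a plotter. It is not Noll's program.

```python
import math

def riley_currents(n_lines=20, n_points=400, width=10.0, spacing=0.4,
                   amplitude=0.15, p0=0.2, k=0.1):
    """Return n_lines parallel polylines whose local period is p0 + k*x.

    Hypothetical reconstruction: the period increases linearly along x,
    and each line is the same curve offset by `spacing` in y.
    """
    lines = []
    for i in range(n_lines):
        y0 = i * spacing
        points = []
        for j in range(n_points):
            x = width * j / (n_points - 1)
            # Phase is the integral of 2*pi / (p0 + k*x) dx, so the
            # instantaneous period at x equals p0 + k*x.
            phase = (2 * math.pi / k) * math.log(1 + k * x / p0)
            points.append((x, y0 + amplitude * math.sin(phase)))
        lines.append(points)
    return lines
```

Integrating the reciprocal of the period to obtain the phase keeps the waves continuous while they stretch. Simply writing `sin(2*pi*x / (p0 + k*x))` would not give a linearly increasing period.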

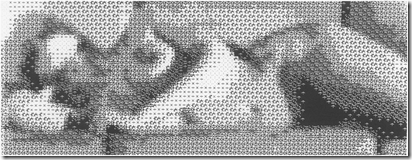

Fig. 2. Kenneth C. Knowlton and Leon Harmon, Studies in Perception I, 1966. Knowlton and Harmon made this picture at Bell Laboratories in Murray Hill, New Jersey. It is an early example of image processing and probably the first 'computer nude'. It was exhibited in the show "The Machine" at the Museum of Modern Art in New York in 1968. Scanning a photograph, they converted the analog voltages into binary numbers, which were stored on magnetic tape. Another program assigned typographic symbols to these numbers according to halftone densities. Thus the archetype of artistic topics, the nude, is represented by a microcosmos of electronic symbols printed by a microfilm plotter.

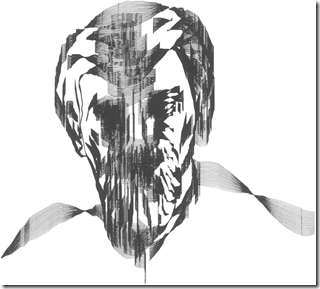

Fig. 3. Charles A. Csuri and James Shaffer, Sine Wave Man, 1967. This picture won first prize in Computers and Automation magazine's annual computer art competition in 1967. Initially the artist drew a human face and coded a handful of selected coordinates from the line drawing. This data served as fixed points for the application of Fourier transforms. The result was a number of sine curves with different slopes, although each shares the seed points from the drawing of the face.

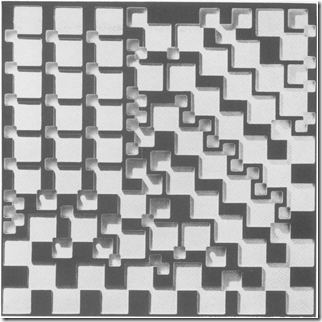

Fig. 4. Georg Nees, Sculpture, 1968. One of the earliest sculptures created completely under computer control. This piece was exhibited at the Biennale in Venice in 1969. Nees had a long-standing interest in the study of artificial visual complexity in connection with the chance-determination reaction. He programmed a Siemens 4004 computer to generate pseudorandom numbers, which were tightly controlled to determine width, length and depth of rectangular objects. The three-dimensional data were stored on magnetic tape and used to drive an automatic milling machine off line. The sculpture was cut from a block of wood.

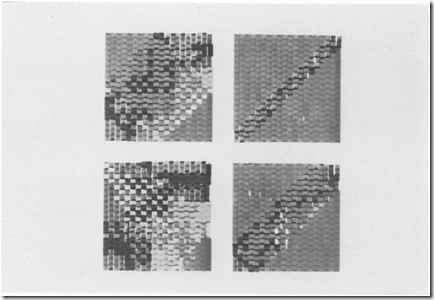

Fig. 5. Frieder Nake, Matrix Multiplications, 1967. These four pictures reflect the translation of a mathematical process into an aesthetic process. A square matrix was initially filled with numbers. The matrix was multiplied successively by itself, and the resulting new matrices were translated into images at predetermined intervals. Each number was assigned a visual sign with a particular form and color. These signs were placed in a raster according to the numeric values of the matrix. The images were computed on an AEG/Telefunken TR4 programmed in ALGOL 60 and were plotted with a ZUSE Graphomat Z64. A portfolio with 12 drawings was published and sold in 1967 by Edition Hansjoerg Mayer, Stuttgart, Germany.
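The following minimal Python sketch illustrates the process described in the caption: successive powers of a square matrix, with each entry mapped to a visual sign by interval. The 2-by-2 starting matrix, the four-character sign repertoire, and the choice to split each matrix's own range into equal intervals are assumptions made for illustration. Nake's ALGOL 60 program and his actual signs and colors are not reproduced here.

```python
def mat_mul(a, b):
    """Multiply two square matrices given as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def successive_powers(m, count):
    """Return [M, M^2, ..., M^count] by repeatedly multiplying by M."""
    result = [m]
    for _ in range(count - 1):
        result.append(mat_mul(result[-1], m))
    return result

# Hypothetical repertoire of visual signs, ordered from low to high value.
SIGNS = [" ", ".", "+", "#"]

def to_signs(m, signs=SIGNS):
    """Map each non-negative entry to a sign by splitting 0..max into equal intervals."""
    top = max(max(row) for row in m)
    n = len(signs)
    return ["".join(signs[v * n // (top + 1)] for v in row) for row in m]

for power in successive_powers([[1, 1], [1, 0]], 3):
    print(to_signs(power))
```

Entries grow quickly under repeated multiplication. For that reason, this sketch rescales the intervals for each matrix rather than using one fixed set of boundaries.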

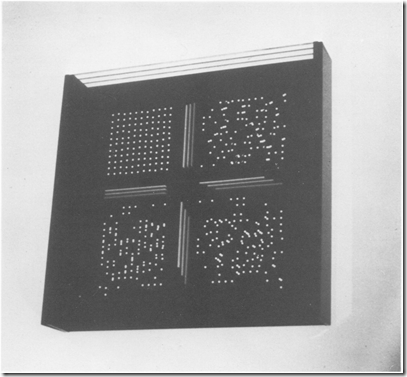

Fig. 6. Tony Longson, Quarter #5, 1977. As a sculptor Tony Longson became interested in the perception of space and how it is supported by perspective projection and parallax vision. Quarter #5 is the last of a series of pieces started in 1969. All are made of four sheets of Perspex mounted at small intervals on top of each other such that the viewer sees through all four layers. The complete image consists of an arrangement of dots in a rectangular grid. But this image has been decomposed randomly into four sections, so that only a subset of dots is engraved into each sheet of plastic. Viewers approaching the piece will see first an apparently chaotic distribution of dots. However, once the viewer is positioned in one of four fixed viewpoints, chaos will suddenly change into a highly structured order of dots in one of the quadrants [58].
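The random decomposition step can be sketched in Python as a partition of the grid's dots among four sheets. The sketch only assigns each dot to one sheet at random and checks that the sheets together reconstruct the full grid. The perspective geometry that makes the order reappear from fixed viewpoints is deliberately omitted, and the function name `decompose` is hypothetical.

```python
import random

def decompose(rows, cols, sheets=4, seed=0):
    """Randomly assign every dot of a rows x cols grid to exactly one sheet.

    Hypothetical sketch: ignores the perspective offsets a real piece
    would need so that dots realign from the intended viewpoints.
    """
    rng = random.Random(seed)
    layers = [set() for _ in range(sheets)]
    for r in range(rows):
        for c in range(cols):
            layers[rng.randrange(sheets)].add((r, c))
    return layers

layers = decompose(6, 8)
print([len(layer) for layer in layers])
```

Each sheet on its own looks like scattered noise. The structured grid exists only in the union of the four sheets.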

IV. COLLABORATION OF ARTISTS AND SCIENTISTS/PROGRAMMERS/ENGINEERS

Usually artists cooperated with scientists because only scientists could provide access to computers in industrial research labs and university computer centers. But artists needed the programming expertise of scientists even more. Some collaborations prospered over many years and led to successful achievements in custom-designed programs for artistic purposes [35]. Even so, Ken Knowlton described the different attitudes of artists and programmers as a major difficulty. In Knowlton's view artists are "illogical, intuitive, and impulsive." They needed programmers who were "constrained, logical, and precise" as translators and interfaces to the computers of the 1960s [36]. But the first of a growing breed of technological artists with hybrid capabilities started to appear, too. Manfred Mohr proudly declared that he was self-taught in computer science, Edvard Zajec learned programming and today teaches it to art students, and Duane Palyka holds degrees in both fine arts and mathematics.

V. THE FIRST GRAPHICS LANGUAGES

Early attempts at graphics languages fell into one of two categories. Either they were graphics subroutines implemented in one of the common programming languages and callable from them, or they were written in machine language and set up their own syntax and command set or vocabulary.

The first graphics extensions, G1, G2 and G3 by Georg Nees, for instance, were written in ALGOL 60 and contained only commands for pen control and random number generators [38].

More elaborate was Mezei's SPARTA, a system of Fortran calls incorporating graphics primitives (line, arc, rectangle, polygon, etc.), different pen attributes (dotted, connected, etc.), and transformations (move, size, rotate).

A further development led to ARTA, an interactive language based on light-pen control. ARTA also provided subroutines for key-frame interpolation, allowing both the interactive drawing of two key frames and the description of the type of interpolation with a function [39].
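The following Python sketch shows key-frame interpolation in which "the type of interpolation" is supplied as a function. It assumes that both key frames have the same number of points in corresponding order, and it passes the timing curve as a parameter. The names `inbetween` and `key_frame_sequence` are hypothetical, and the code does not reproduce ARTA's actual subroutines.

```python
def inbetween(key_a, key_b, t, timing=lambda u: u):
    """Interpolate corresponding points of two key frames at time t in [0, 1].

    `timing` maps normalized time to interpolation progress, so
    lambda u: u gives linear motion and lambda u: u * u an ease-in.
    """
    if len(key_a) != len(key_b):
        raise ValueError("key frames must have the same number of points")
    s = timing(t)
    return [(xa + s * (xb - xa), ya + s * (yb - ya))
            for (xa, ya), (xb, yb) in zip(key_a, key_b)]

def key_frame_sequence(key_a, key_b, n_frames, timing=lambda u: u):
    """Generate n_frames drawings from key_a to key_b inclusive."""
    return [inbetween(key_a, key_b, i / (n_frames - 1), timing)
            for i in range(n_frames)]

# Example: a square drawn as a closed polyline morphing into a diamond, with ease-in timing.
square = [(0, 0), (2, 0), (2, 2), (0, 2), (0, 0)]
diamond = [(1, -1), (3, 1), (1, 3), (-1, 1), (1, -1)]
frames = key_frame_sequence(square, diamond, 5, timing=lambda u: u * u)
```

Separating the geometry (the two drawn key frames) from the timing function mirrors the division described above. The artist draws the endpoints, and a function describes how motion proceeds between them.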

A language extension similar to Fortran was GRAF, written by Jack Citron et al., at IBM; GRAF also offered optional light-pen input [40].

Even though these graphics extensions made programming in machine code superfluous, they still required programming in Fortran or ALGOL 60. In the second group of graphics languages we find Frieder Nake's COMPART ER 56 as well as Ken Knowlton's languages, BEFLIX and EXPLOR. The names of these programming environments indicate either the function of the language or the machine for which it was implemented. COMPART ER 56, for instance, refers to a particular computer, the Standard Elektrik ER 56, for which the language was written. COMPART ER 56 contained three subpackages: a space organizer, a set of different random number generators, and selectors for the repertoire of graphic elements. Nake used COMPART ER 56 extensively to create more than 100 drawings [30].

BEFLIX (a corruption of Bell Flicks) was designed to produce animated movies on a Stromberg-Carlson 4020 microfilm recorder. Points within a 252-by-184 coordinate system could be controlled, each having one of eight different shades of gray. In contrast with today's frame buffers, which hold the image memory in their bit map, BEFLIX's images resided in the computer's main memory.

As an animation language it provided instructions for several motion effects as well as for camera control. Knowlton had initially hoped that artists would learn the language to program their own movies, but he came to realize that they usually wanted to create something the language could not facilitate, and they also shied away from programming. Therefore he accommodated the artists by, for example, writing special extensions to BEFLIX for Stan Vanderbeek, or creating a completely new language, EXPLOR (images from EXplicit Patterns, Local Operations and Randomness), for Lillian Schwartz [41].

None of the graphics languages mentioned received widespread use, partly because their implementation was machine-dependent and also because each language was restricted in scope. The tools were useful for their inventors' goals but lacked sufficient flexibility and ease of use to accommodate the creative ideas of many different artists. BEFLIX, however, was installed in several art departments and provided the helping hand many programmers had given when collaborating individually with artists.

At Ohio State University Charles Csuri directed, over many years, the development of several graphics languages, all designed for ease of use, interactive control, and animation capabilities. One incarnation, GRASS, or GRAphics Symbiosis System, was designed by Tom DeFanti, first for a real-time vector display and later, as ZGRASS, as a Z80-based animation language for artists. This language has been widely used by programming artists, which indicates that it possesses sufficient generality to support different imaging strategies as well as suitable command and execution structures to adapt to artistic creation [44, 45].

VI. THE FIRST GRAPHICS SYSTEMS

Let's turn the clock back again to the 1960s when the microcomputer had not even been conceived. The computers used initially were large mainframes, soon followed by minicomputers. Such equipment cost anywhere from $100,000 to several million dollars. All these machines required air conditioning and therefore were located in separate computer rooms, which served as fairly uninhabitable 'studios'. Programs and data had to be prepared with the keypunch; then the punch cards were fed into the computer, which ran in batch mode. In general, the systems were not interactive and could produce only still images.

Pen plotters, microfilm plotters and line printers produced most of the visual output. The first animations were created by plotting all still frames of the movie sequentially on a stack of paper or microfilm. Motion could only be reviewed after these stills had been transferred to 16-mm film and projected. Only a few artists had the opportunity to use even more expensive vector displays introduced in the late 1960s by companies such as IBM or newly founded graphics manufacturers such as Evans & Sutherland, Vector General, and Adage, whose displays cost between $50,000 and $100,000. These displays featured very high addressability, up to 4000 by 4000 points, and could update coordinates fast enough to support real-time animation of wireframe models in 3-D [46].

One of the first computer art shows incorporating these interactive displays took place at the College of the Arts at Ohio State University in 1970 [47].

More popular and cost-effective were the storage display tubes offered by Tektronix, starting around $10,000. But even if only a single line was to be changed, the picture had to be erased completely and redrawn.

With the exception of the microfilm plotter, all output devices were line or vector oriented and thus characterized the majority of early computer art. The microfilm plotter is something of a hybrid between a vector CRT and a raster image device [48].

The CRT beam scans the screen sequentially, turning on and off under computer control. A camera mounted on the CRT makes a time exposure during each scan. Thus the resulting image looks like a photograph taken from a raster device.

Some artists used the alphanumeric characters of the line printer to produce shaded areas, either by overstriking one position with different characters or by relying on the capacity of the eye to integrate these separate microimages into one larger macroimage or supersign. The German artist Klaus Basset attained extraordinarily subtle shading effects by using a simple typewriter. His work clearly relates to computer art, even if he did not employ a computer directly to control the writing process. He programmed himself, so to speak, to execute precisely designed algorithms with a mechanical drawing device [49].

Output was often taken directly from the machine and exhibited. Moreover, the signatures were sometimes plotted by the machine as part of the drawing program. Later, artists used these graphics as sketches for manually produced paintings or copy for photographically transferred serigraphs. Artists also followed tradition and signed their computer-generated work by hand. Color was often introduced only in this later phase of postproduction, or a limited range could be achieved by using different plotter pens.

Harold Cohen realized this limitation of the available graphics equipment. His approach was to delegate only the job of drawing to the computer, for which he used a plotter, a CRT, or the funny-looking 'turtle', a remotely controlled drawing vehicle. He colored the resulting line drawings later by hand, thus mixing automatic drawing and human painting styles. He recently published a series of his computer-generated line drawings in The First Artificial Intelligence Coloring Book as an invitation to everyone to combine their creative efforts with those of the computer [50].

Methods of entering graphics were even more restricted. With hardly any interactive means of controlling the computer, artists had to rely on programs and predefined data. (The technology of light pens and data tablets had already developed, but these input devices were not widely available.) Once the data was fed into the computer, there was no more creative invention. Therefore the design process took place exclusively in the conceptualization prior to running a program. It was at least a decade before Ivan Sutherland's interactive concepts, demonstrated in 1963 with "Sketchpad" [51], resulted in a breakthrough and the ultimate proliferation of paint systems that enabled the artist to draw directly into the computer's memory. Today paint systems, sophisticated 3-D modeling software, and video input provide quick ways to create complex images, but the first generation of computer artists had to focus on logic and mathematics, in short, on rather abstract methods. To some degree this restriction brought about creative concepts derived directly from computer technology itself.

VII. THE CONCEPTS

The pioneers of computer art were driven by the newness of the technology, the untouched areas wide open for inventive investigation. Because of the lack of viable commercial applications at that time, they enjoyed the rare freedom to define their own goals, guided only by personal motivation and intuition. This small group of believers had a vision, which has not yet been fulfilled. These artists felt challenged to come up with sophisticated artistic and intellectual concepts to offset the crude computer graphics machines of the mid-1960s with their lack of color, speed and interactivity. It might be fruitful to resolve with today's technology the paradox Manfred Mohr found in his work, a paradox particularly applicable to the early days of computer art: "The paradox of my generative work is that formwise it is minimalist and contentwise it is maximalist" [52].

VIII. COMBINATORICS

The computer was thought of by Nake as a "Universal Picture Generator" capable of creating every possible picture out of a combination of available picture elements and colors [30].

Obviously, a systematic application of the mathematics of combinatorics would lead to an inconceivable number of pictures, both good and bad, and would require an infinite production time in human terms, even if exactly computable. This raised the issue of preselecting a few elements that could be explored exhaustively and presented in a series or cluster of subimages as one piece. Manfred Mohr, for instance, centered his work on the cube and concisely devised successive transformations that modified an ordinary cube. The complex set of possible transformations was then plotted, and the transformations were displayed simultaneously as a single image. A series of catalogs of his work from 1973 to the present exquisitely documents the consistent progression of his visual logic [52].
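A small worked example in Python shows how quickly even a single, preselected element explodes combinatorially. It takes the 12 edges of a cube and enumerates the drawings obtained by removing edges. Edge removal is the transformation named in the Fig. 9 caption, but this enumeration is an illustrative assumption, not Mohr's program, and the function name is hypothetical.

```python
from itertools import combinations, product
from math import comb

# Vertices of a unit cube as 3-bit tuples. Two vertices share an edge
# exactly when they differ in one coordinate.
vertices = list(product((0, 1), repeat=3))
edges = [(a, b) for a, b in combinations(vertices, 2)
         if sum(x != y for x, y in zip(a, b)) == 1]

def drawings_with_k_edges_removed(k):
    """Yield every edge set obtained by deleting exactly k of the cube's edges."""
    for removed in combinations(edges, k):
        yield [e for e in edges if e not in removed]

# Removing any subset of 12 edges gives 2**12 = 4096 distinct line sets.
total = sum(comb(len(edges), k) for k in range(len(edges) + 1))
print(len(edges), total)
```

Even before rotations or projections are considered, a single cube yields 4096 edge subsets, including 66 subsets with exactly two edges removed. This count suggests why exhaustive exploration was practical only for a deliberately narrow repertoire.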

IX. ORDER, CHAOS, AND RANDOMNESS

Other artists chose to investigate the full range between order and chaos, employing random number generators. In this way they could create many different images from one program, introducing change with the random selection of certain parameters to define, for instance, location, type or size of a graphic element [8, 29].

Random numbers served to break the predictability of the computer. They simulated intuition in a very limited fashion and helped overcome the severe restrictions of human interaction with the computer. Random numbers could be constrained within a limited numeric range and then applied to a set of rules of aesthetic relationships. If these rules were derived from an analysis of traditional paintings, the program could simulate a number of similar designs, according to Noll [53] and Nake [30].
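The combination of constrained random numbers and rules can be sketched in Python as rejection sampling. Position and size are drawn from fixed ranges, and a candidate is kept only if it passes a rule. The single rule used here (placed squares must not overlap), the parameter values, and the function names are hypothetical stand-ins for the aesthetic relationships mentioned above, not Noll's or Nake's actual rules.

```python
import random

def overlaps(a, b):
    """True if two axis-aligned squares given as (x, y, size) share any area."""
    ax, ay, a_size = a
    bx, by, b_size = b
    return (ax < bx + b_size and bx < ax + a_size and
            ay < by + b_size and by < ay + a_size)

def compose(n_elements, width=100, height=100, min_size=4, max_size=12,
            seed=1, max_tries=10_000):
    """Place up to n_elements squares with constrained random size and position.

    A candidate is accepted only if it satisfies the (hypothetical) rule
    that it does not overlap any previously accepted square.
    """
    rng = random.Random(seed)
    placed = []
    for _ in range(max_tries):
        if len(placed) == n_elements:
            break
        size = rng.randint(min_size, max_size)
        x = rng.randint(0, width - size)
        y = rng.randint(0, height - size)
        candidate = (x, y, size)
        if not any(overlaps(candidate, p) for p in placed):
            placed.append(candidate)
    return placed
```

Changing only the `seed` produces a new member of the same family of compositions. Changing the ranges or the acceptance rule changes the family itself.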

Or the artist could set up new rules for generating entire families of new aesthetic configurations, using random numbers to decide where and how to place graphic elements. Peter Struycken recovered the rigorous tradition of the art movement de Stijl and consciously disregarded even abstract forms by focusing exclusively on pure color. In his own words: "Form is an easier conceptual representation and repetition than color. Form can almost always be associated with a form that is already known. How easy it is to connect abstract forms to reality: this is just like a cloud, that like a snake, these like flowers. Form is then regarded as something in itself, where recognition is as important as seeing as such" [8].

To discourage even the faintest notion of content, he reduced the image in "Plons" (Dutch for Splash) to simple squares forming a coordinate system. The computer calculated propagation of color energy emanating from an arbitrary point of initial impact. The changes of color distribution were presented in numerical codes, which the artist translated into actual color paintings by hand.
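This hypothetical Python sketch shows how a splash spreading over a grid of squares could be reduced to numerical codes that a painter then translates by hand. The text does not say how Struycken's program modeled the propagation. This sketch assumes energy falls off by one code level per ring of squares around the impact point. The decay rule, the number of levels, and the function name `plons` are all illustrative assumptions.

```python
def plons(rows, cols, impact, levels=8, step=1):
    """Return a grid of integer color codes for a splash at `impact`.

    Assumed rule: the code drops by one level for every `step` rings of
    squares (Chebyshev distance) away from the point of impact.
    """
    ir, ic = impact
    grid = []
    for r in range(rows):
        row = []
        for c in range(cols):
            ring = max(abs(r - ir), abs(c - ic))
            row.append(max(0, levels - 1 - ring // step))
        grid.append(row)
    return grid

for row in plons(5, 7, impact=(1, 4), levels=4):
    print(" ".join(str(code) for code in row))
```

In the spirit of "an arbitrary point of initial impact," the impact coordinates could themselves be drawn from a random number generator.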

X. MATHEMATICAL FUNCTIONS

Numeric evaluations of functions could be plotted directly. Graphs of different functions could be merged, or their points could be connected. These methods relate directly to experiments with analog computing machines by Ben F. Laposky and Herbert W. Franke in the 1950s. They constructed their own imaging systems based on an arrangement of voltage-controlled oscillators. The voltages deflected the beam of oscilloscopes to produce electronic line drawings. Laposky accordingly called his images 'oscillons'. They were photographic time exposures of the CRT display [54].
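The analog principle can be imitated digitally. Two oscillator signals drive the horizontal and vertical deflection, and a time exposure records the whole trace. This Python sketch assumes pure sine oscillators with an optional exponential damping term. Laposky's and Franke's circuits mixed other waveforms, and the function name `oscillon` is used only for illustration.

```python
import math

def oscillon(fx, fy, phase, samples=1000, damping=0.0):
    """Sample one second of a beam deflected by two sine 'oscillators'.

    x is driven at frequency fx with a phase offset, y at frequency fy.
    The returned points stand in for a photographic time exposure of the
    trace. Damping > 0 makes the figure spiral inward.
    """
    points = []
    for i in range(samples):
        t = i / samples
        decay = math.exp(-damping * t)
        points.append((decay * math.sin(2 * math.pi * fx * t + phase),
                       decay * math.sin(2 * math.pi * fy * t)))
    return points

circle = oscillon(1, 1, math.pi / 2)           # equal frequencies, 90 degrees apart
figure = oscillon(3, 2, 0.3, damping=1.5)      # a damped 3:2 Lissajous-like figure
```

Integer frequency ratios give closed Lissajous figures. Slightly detuned ratios or damping give the drifting, layered curves typical of oscilloscope art.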

Entire number fields were drawn with digital computers, which offered control superior to that of analog systems. For instance, artist/scientists would display modular relationships or particular properties such as primeness or various stages of a matrix multiplication approaching its limiting boundaries. The use of mathematics does not necessarily imply a highly geometrical result. Some scientists tried to model irregular patterns. Knowlton, for example, simulated crystal growth, and Manfred R. Schroeder visualized equations describing noise in phone lines. Both experiments relate to the mathematics of fractals so prevalent today for the modeling of natural phenomena [55].
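Number fields of this kind are easy to reproduce as text. The following Python sketch fills a grid cell by cell, marking cells either by primeness of the running index or by a modular relationship between row and column. The two rules and the characters used are illustrative assumptions, not reconstructions of any particular early work.

```python
def is_prime(n):
    """Trial-division primality test for small non-negative integers."""
    if n < 2:
        return False
    d = 2
    while d * d <= n:
        if n % d == 0:
            return False
        d += 1
    return True

def prime_field(rows, cols):
    """Mark cell (r, c) when its running index r * cols + c is prime."""
    return ["".join("#" if is_prime(r * cols + c) else "." for c in range(cols))
            for r in range(rows)]

def modular_field(rows, cols, m):
    """Mark cell (r, c) when the product r * c is divisible by m."""
    return ["".join("#" if (r * c) % m == 0 else "." for c in range(cols))
            for r in range(rows)]

print("\n".join(prime_field(6, 30)))
print()
print("\n".join(modular_field(12, 24, 6)))
```

Changing the grid width in `prime_field` or the modulus in `modular_field` rearranges the same arithmetic facts into visibly different patterns.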

XI. REPRESENTATIONAL IMAGES

Whereas the last type of image visualizes the mathematical behavior of numbers, the numbers could also represent the coordinates of a hand drawing. This data had to be meticulously entered into the computer via punch cards; then various processing methods could be applied to the image data. Leslie Mezei deformed the image progressively into complete noise [56].

Charles Csuri and James Shaffer applied Fourier transforms to a subset of the data samples and generated complex sine functions through those fixed points. The originally digitized drawing, combined with several sine waves, formed the final image of the "Sine Wave Man". The Japanese Computer Graphics Technique Group experimented with the metamorphosis of one image into a completely different one. Thus a realistic face could be completely distorted or gradually transformed into a geometric entity such as a square. The interpolation techniques they were using for the creation of a simple image became the cornerstone of animation.

This animation technique, called key-frame animation, was pioneered at the National Research Council of Canada by Nestor Burtnyk and Marceli Wein [57].

Peter Foldes used interpolation techniques successfully as the major stylistic method in his movie Hunger. Optical scanners automated the task of entering visual data into the computer and in effect revealed the potential of machine vision. These images were stored in the computer in the form of different numbers to encode different gray values, and later color. They could be printed with plotters, using a printing concept similar to that used for halftones, where dots of different sizes and densities are employed.

The artist could map the gray values of an image to a set of arbitrary visual symbols and print out the converted image. Images created in this way include the nude by Knowlton and Harmon, which on closer inspection is seen to consist of numerous electrical symbols, and Schroeder's eye, whose close-up reveals letters forming the sentence "One picture is worth a thousand words".
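Mapping gray values to symbols amounts to choosing, for each pixel, a symbol whose ink density matches the pixel's darkness. The Python sketch below uses a hypothetical ramp of ten typewriter characters in place of Knowlton and Harmon's electrical symbols or Schroeder's letters; any set of symbols ordered by ink density would work the same way.

```python
# Hypothetical symbol ramp, ordered from most ink to least ink.
SYMBOLS = "@%#*+=-:. "


def to_symbols(image: list[list[int]], symbols: str = SYMBOLS,
               levels: int = 256) -> list[str]:
    """Replace each gray value (0 = black .. levels-1 = white) by the symbol
    whose position in the ramp corresponds to that gray level."""
    return ["".join(symbols[g * len(symbols) // levels] for g in line)
            for line in image]
```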

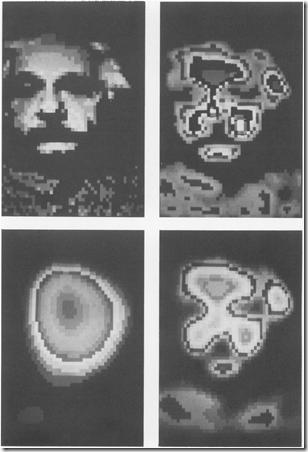

Fig. 7. Herbert W. Franke, Portrait Albert Einstein, 1973. A photograph of Einstein was scanned and digitized optically. The data were stored on ticker tape and displayed on a CRT using programs developed for medical diagnosis. Herbert Franke generated the colors with random numbers and by smoothing contour lines. This series of portraits gradually becomes more abstract and thus visually fuses Einstein's portrait with a nuclear blob.

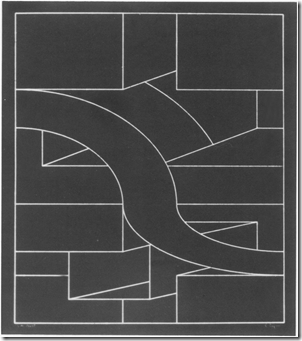

Fig. 8. Edvard Zajec, Prostor, 1968-69. One of a series of drawings from the Prostor program. Zajec was concerned with establishing a design system that could generate a multitude of variations. Each time the program runs, it defines a rectangle and subdivides it according to harmonic proportions. Figures are then formed inside this composition by successively selecting lines from the set: vertical, horizontal, diagonal and sinusoidal. The parameters for length and amplitude comply with harmonic ratios, and the lines connect with each other according to predetermined rules [37].
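Zajec's procedure can be approximated in a short Python sketch, under loudly stated assumptions: the caption does not list the ratios, the splitting rule or the connection rules, so the ratio set `RATIOS`, the rule of splitting the longer side, and the single stroke per cell are all hypothetical. Each run with a different seed gives a different variation, which is the property the caption emphasizes.

```python
import random

Rect = tuple[float, float, float, float]  # x, y, width, height

# Assumed "harmonic" ratios; the caption does not list the ones Zajec used.
RATIOS = [(1, 2), (2, 3), (3, 4), (3, 5)]
LINE_TYPES = ["vertical", "horizontal", "diagonal", "sinusoidal"]


def subdivide(rect: Rect, depth: int, rng: random.Random) -> list[Rect]:
    """Recursively split the longer side of a rectangle in a ratio p:q."""
    x, y, w, h = rect
    if depth == 0:
        return [rect]
    p, q = rng.choice(RATIOS)
    if w >= h:
        w1 = w * p / (p + q)
        parts = [(x, y, w1, h), (x + w1, y, w - w1, h)]
    else:
        h1 = h * p / (p + q)
        parts = [(x, y, w, h1), (x, y + h1, w, h - h1)]
    return [cell for part in parts for cell in subdivide(part, depth - 1, rng)]


def figure(cells: list[Rect], rng: random.Random) -> list[tuple[str, float]]:
    """Pick one line type per cell; its length is the cell's longer side
    scaled by another ratio from the same set."""
    strokes = []
    for _, _, w, h in cells:
        p, q = rng.choice(RATIOS)
        strokes.append((rng.choice(LINE_TYPES), max(w, h) * p / q))
    return strokes


rng = random.Random(1969)
cells = subdivide((0.0, 0.0, 100.0, 60.0), depth=3, rng=rng)
strokes = figure(cells, rng)
```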

Fig. 9. Manfred Mohr, P159 A, 1973. The illusion of a three-dimensional cube is evoked by projecting a set of 12 straight lines onto the two-dimensional drawing plane. Mohr dissolves the three-dimensional form by consecutively taking away edges (lines) of the cube and observes the appearance of new, two-dimensional icons. In addition, he introduces rotations and other transformations of the cube to foster visual ambiguity and instability. The dynamics of this process and its visual inventions are explored systematically, and each result is drawn as part of a cluster of images representing the complete set of combinations.
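The geometric core of this series (a cube's 12 edges, the removal of some of them, a rotation and a projection onto the plane) can be sketched in Python. The rotation angles, the use of orthographic projection and the function names are assumptions for illustration; they are not taken from Mohr's program.

```python
import itertools
import math

Vec3 = tuple[float, float, float]

# The 8 corners of a cube centered on the origin.
VERTICES: list[Vec3] = [(x, y, z) for x in (-1, 1) for y in (-1, 1) for z in (-1, 1)]
# Two corners share an edge when they differ in exactly one coordinate: 12 edges.
EDGES = [(i, j) for i, j in itertools.combinations(range(8), 2)
         if sum(a != b for a, b in zip(VERTICES[i], VERTICES[j])) == 1]


def rotate(p: Vec3, ax: float, ay: float) -> Vec3:
    """Rotate a point by angle ay about the y axis, then by ax about the x axis."""
    x, y, z = p
    x, z = x * math.cos(ay) + z * math.sin(ay), -x * math.sin(ay) + z * math.cos(ay)
    y, z = y * math.cos(ax) - z * math.sin(ax), y * math.sin(ax) + z * math.cos(ax)
    return x, y, z


def project(edges, ax: float = 0.5, ay: float = 0.6):
    """Orthographic projection onto the drawing plane: rotate, then drop z.
    Returns one 2D line segment per remaining edge."""
    flat = [rotate(v, ax, ay)[:2] for v in VERTICES]
    return [(flat[i], flat[j]) for i, j in edges]


def drawings_keeping(k: int):
    """Every way of keeping k of the 12 edges, each projected to 2D."""
    return (project(subset) for subset in itertools.combinations(EDGES, k))
```

Keeping k of the 12 edges can be done in C(12, k) ways, so the complete set over all k contains 2^12 = 4096 drawings, 220 of them with exactly nine edges.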

Fig. 10. Colette and Charles Bangert, Landlines, 1970. This couple has been collaborating on their computer art for almost two decades. In parallel, Colette has continued to draw by hand. The investigation of similarities and differences between 'hand work' and computer plots is the foundation of their creative concepts, and the findings are fed back into new programs and more sophisticated hand drawings. This methodological approach is tightly coupled with the subject matter of all their work: landscapes. Colette reports: "The elements of both the computer work and my hand work are often repetitive, like leaves, trees, grass and other landscape elements are. There is sameness and similarity, yet everything is changing" [59].

Fig. 11. Klaus Basset, Kubus, 1974. The typewriter graphics are composed of only five signs: 'I', 'O', 'o', 'H' and '%'. By overstriking these type symbols in various combinations, Basset achieved a range of halftones from bright to dark. He used these tones to shade cubic objects, which he calculated and meticulously typed by hand. Recently, Basset started to use a computer with a line printer as an output device. He claims that the typewritten pieces are more precise
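How five signs can yield a range of tones becomes clear when their overstrike combinations are enumerated: five signs give 2^5 - 1 = 31 non-empty combinations, plus the blank cell. The Python sketch below ranks them by estimated ink coverage. The coverage values in `COVERAGE` are invented for illustration, and combining them as if each sign's ink were independently scattered is an assumption; real tones depend on the typeface and ribbon.

```python
from itertools import combinations
from math import prod

# Hypothetical fraction of a character cell inked by each sign.
COVERAGE = {"I": 0.15, "O": 0.25, "o": 0.12, "H": 0.30, "%": 0.28}


def overstrike_coverage(signs) -> float:
    """Estimated inked fraction when the signs are typed on top of each other,
    assuming each sign's ink falls independently of the others."""
    return 1.0 - prod(1.0 - COVERAGE[s] for s in signs)


def tone_scale() -> list[tuple[str, float]]:
    """All overstrike combinations (plus the blank cell), lightest first."""
    tones = [("", 0.0)]
    for k in range(1, len(COVERAGE) + 1):
        for combo in combinations(COVERAGE, k):
            tones.append(("".join(combo), overstrike_coverage(combo)))
    return sorted(tones, key=lambda tone: tone[1])
```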

Fig. 12. Sonia Landy Sheridan, Scientist's Hand at 3M, 1976. This educational masterpiece implicitly pays homage to the major utensil of early programming: the punch card. The image of the hand has been transferred onto the top line of a stack of punch cards by an electrostatic process using a prototype of 3M's VQC photocopier. Sheridan was trying to show the children of the scientists at 3M's central research labs in St Paul, Minnesota, how the computer stores information on cards and how the image on the cards can be manipulated without even using a machine. This hand can be stretched millions of ways by merely shifting the cards [42, 43].
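Because each card carries one thin slice of the image, shifting cards amounts to shifting rows of a raster. The Python sketch below models each card as a string of characters; the function name and the bar-shaped test image are hypothetical.

```python
def shift_cards(rows: list[str], offsets: list[int]) -> list[str]:
    """Each string is the slice of the image printed on one card's edge.
    Sliding card i sideways by offsets[i] positions distorts the picture."""
    if len(rows) != len(offsets):
        raise ValueError("need one offset per card")
    base = min(offsets)
    shifted = [" " * (off - base) + row for row, off in zip(rows, offsets)]
    width = max(len(r) for r in shifted)
    return [r.ljust(width) for r in shifted]


# A hypothetical 4-card "image" of a vertical bar, sheared by offsetting each card.
bar = ["##", "##", "##", "##"]
sheared = shift_cards(bar, [0, 1, 2, 3])
```

The count of possible distortions grows quickly: with 30 cards, each placed in just one of two positions, there are already 2^30, more than a billion, arrangements.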

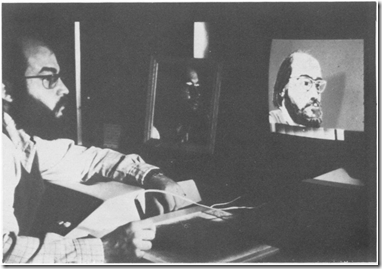

Fig. 13. Duane Palyka, Painting Self-Portrait, 1975. (Photo: Mike Milochek). The artist painted a self-portrait on a computer at the University of Utah Computer Science Department in 1975. In the picture, Palyka is using a simple paint program called Crayon, written in Fortran by Jim Blinn. The program ran under the DOS operating system on a DEC PDP-11/45 using the first Evans & Sutherland frame buffer.

XII. THE END OF AN ERA

The end of the first decade of computer art coincided with important changes due mainly to three technological advances:

1. The invention of the microprocessor changed the size, price, and accessibility of computers dramatically. The computer could become a truly personal tool.

2. Interactive systems became common in the creative process. Traditional paradigms of artistic creation such as painting and drawing, photographing and videotaping could be simulated on the computer.

3. Raster graphics displays increased the complexity of imagery significantly. Bit-mapped image memories allowed a virtually unlimited choice of color and thus supported the creation of smoothly shaded or textured three-dimensional images.
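The link between bit-mapped memory and color choice can be sketched with an indexed frame buffer: each pixel stores a short index into a color lookup table whose entries hold full RGB values. The sizes in this Python sketch (8-bit indices, 8 bits per color channel) and the class and method names are assumptions for illustration, not the specifications of any particular historical frame buffer.

```python
def palette_size(bits_per_channel: int, channels: int = 3) -> int:
    """Number of distinct colors a lookup-table entry can hold."""
    return 2 ** (bits_per_channel * channels)


class IndexedFrameBuffer:
    """Bit-mapped image memory: every pixel stores a small index into a
    color lookup table (LUT) whose entries are full RGB colors."""

    def __init__(self, width: int, height: int, index_bits: int = 8):
        self.lut = [(0, 0, 0)] * (2 ** index_bits)  # 8-bit R, G, B per entry
        self.pixels = [[0] * width for _ in range(height)]

    def set_color(self, index: int, rgb: tuple[int, int, int]) -> None:
        self.lut[index] = rgb

    def plot(self, x: int, y: int, index: int) -> None:
        self.pixels[y][x] = index

    def rgb_at(self, x: int, y: int) -> tuple[int, int, int]:
        return self.lut[self.pixels[y][x]]


fb = IndexedFrameBuffer(320, 240)
# Load a smooth gray ramp into the LUT, a basis for smooth shading.
for i in range(256):
    fb.set_color(i, (i, i, i))
fb.plot(10, 20, 200)
```

With 8 bits per channel, each table entry can take any of 2^24 = 16,777,216 colors, while an 8-bit index limits a single image to 256 of them at once; loading a smooth ramp into the table is one simple way to obtain smooth shading.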

These three advances combined to foster the migration of computer technology into art schools and artists' studios as well as commercial production houses. Intimate collaboration between artists and scientists was no longer required. Supported by the emergence of user-friendly general-purpose and high-level graphics languages, artists became computer literate, or they bought software off shelves stocked by a burgeoning computer graphics industry.

Acknowledgements: The following artists and scientists provided valuable information and visuals for this article. Thank you very much, Colette and Charles Bangert, Klaus Basset, Jack Burnham, Harold Cohen, Charles A. Csuri, Herbert W. Franke, Kenneth C. Knowlton, Ben F. Laposky, Tony Longson, Robert Mallary, Aaron Marcus, Manfred Mohr, Vera Molnar, Zsuzsa Molnar, Frieder Nake, Georg Nees, A. Michael Noll, Duane Palyka, Manfred R. Schroeder, Lillian Schwartz, Sonia Landy Sheridan, Peter Struycken and Edvard Zajec.

REFERENCES

1. K.C. Knowlton, "A Computer Technique for Producing Animated Movies", Proc. AFIPS Conf., No. 25, Spring 1964, pp. 67-87.

2. K.C. Knowlton, "Computer Animated Movies", Emerging Concepts in Computer Graphics, D. Secrest and J. Nievergelt, eds., Benjamin/Cummings Publishing Corp., New York-Amsterdam, 1968, pp. 343-369.

3. E.E. Zajac, "Film Animation by Computer", New Scientist, No. 29, pp. 346-349 (1966).

4. A.M. Noll, "Computers and the Visual Arts", Design and Planning, No. 2, M. Krampen and P. Seitz, eds., Hastings House Publishers, Inc., New York, 1967, pp. 65-79.

5. A.M. Noll, "The Digital Computer as a Creative Medium", IEEE Spectrum 4, 89-95 (1967).

6. M. Bense, Aesthetica. Einführung in die Neue Aesthetik, Agis Verlag, Baden-Baden, 1965.

7. W. Fucks, Nach allen Regeln der Kunst, Deutsche Verlags-Anstalt, Stuttgart, 1968.

8. P. Struycken, Structuur-Elementen 1969-1980, catalog. Museum Boymans-van Beuningen, Rotterdam, 1980, translated from Dutch by Bert Speelpennig, p. 8.

9. L. Hiller and L. Isaacson, Experimental Music. McGraw-Hill Book Co., New York, 1959.

10. J. Burnham, Beyond Modern Sculpture. George Braziller, Inc., New York, 1968.

11. D. Davis, Art and the Future. Praeger Publishers, New York, 1973.

12. H.W. Franke, Computer Graphics, Computer Art. Phaidon, New York, 1971.

13. S. Kranz, Science and Technology in the Arts. Van Nostrand-Reinhold, New York, 1974.

14. F. Malina, ed., Kinetic Art: Theory and Practice. Selections from the Journal Leonardo. Dover Publications, New York, 1974.

15. F. Malina, ed., Visual Art, Mathematics and Computers. Pergamon Press, Elmsford, N.Y., 1979.

16. T. Nelson, Dream Machines-Computer Lib. The Distributors, South Bend, Ind., 1974.

17. J. Reichardt, ed., Cybernetics, Art and Ideas. New York Graphic Society, Greenwich, Conn., 1971.

18. R. Russett and C. Starr, Experimental Animation. Van Nostrand-Reinhold, New York, 1976.

19. G. Youngblood, Expanded Cinema. E.P. Dutton & Co., New York, 1970.

20. J. Whitney, Digital Harmony. BYTE Pubs., Peterborough, N.H., 1980.

21. J. Reichardt, ed., "Cybernetic Serendipity: The Computer and the Arts", Studio International Special Issue, London and New York, 1968.

22. B. Klüver, J. Martin, and R. Rauschenberg, eds., Some More Beginnings: An Exhibition of Submitted Works Involving Technical Materials and Processes. Brooklyn Museum and the Museum of Modern Art, Experiments in Art and Technology, New York, 1968.

23. J. Burnham, ed., Software: Information Technology: Its New Meaning for Art. The Jewish Museum, New York, 1970.

24. F. Popper et al., eds., Electra: L'électricité et l'électronique dans l'art au XXe siècle, Musée d'Art Moderne de la Ville de Paris, Paris, 1983.

25. M. Mohr, "Artist's Statement", The Computer and its Influence on Art and Design. J.R. Lipsky, ed., catalog, Sheldon Memorial Art Gallery, Univ. of Nebraska, Lincoln, Spring 1983.

26. R. Mallary, "Statement", Computer Art: Hardware and Software Vs. Aesthetics. Coordinators: Charles and Colette Bangert, Proc. Seventh National Sculpture Conf., Univ. of Kansas Press, Lawrence, Apr. 1972, pp. 184-189.

27. A. Efland, "An Interview with Charles Csuri", Reichardt, Cybernetic Serendipity, p. 84.

28. Groupe de Recherche d'Art Visuel, Proposition sur le Mouvement. Catalog, Galerie Denise René, Paris, Jan. 1961.

29. V. Molnar, "Artist's Statement", Page #43, pp. 26-27 (1980).

30. F. Nake, Ästhetik als Informationsverarbeitung, Springer Verlag, Wien-New York, 1974.

31. H. Kawano, "What Is Computer Art?" Artist and Computer, R. Leavitt, ed., Creative Computing, Morristown, N.J., 1976, pp. 112-113.

32. H. Cohen, What Is An Image? IJCAI 6, pp. 1028-1057.

33. H. Cohen, "How to Make a Drawing", Science Colloquium. Nat'l Bur. of Stds., Wash. D.C., Dec. 17, 1982.

34. R. Mallary, "Computer Sculpture: Six Levels of Cybernetics", Artforum, May 1969, pp. 29-35.

35. Colette Bangert and Charles Bangert, "Experiences in Making Drawings by Computer and by Hand", Leonardo 7, 289-296 (1974).

36. K.C. Knowlton, "Statement", Computer Art: Hardware and Software Vs. Aesthetics. Coordinators: Charles and Colette Bangert, Proc. Seventh National Sculpture Conf., Univ. of Kansas Press, Lawrence, Apr. 1972, pp. 183-184.

37. E. Zajec, "Computer Art: The Binary System for Producing Geometrical Non-figurative Pictures", Leonardo 11, 13-21 (1978).

38. G. Nees, Generative Computergraphik, Siemens AG, Berlin-Munich, 1969.

39. L. Mezei, "SPARTA: A Procedure Oriented Programming Language for the Manipulation of Arbitrary Line Drawings", Information Processing 68, Proc. IFIP Congress 69, North-Holland, Amsterdam, pp. 597-604.

40. J.P. Citroen and J.H. Whitney, "CAMP-Computer Assisted Movie Production", AFIPS Conf. Proc. 33, 1299-1305.

41. K.C. Knowlton, "Collaborations with Artists-A Programmer's Reflection", F. Nake and A. Rosenfeld, eds., Graphic Languages, North-Holland, Amsterdam, 1972, pp. 399-416.

42. Sonia Landy Sheridan, Energized Artscience. Catalog, Museum of Science and Industry, Chicago, 1978.

43. D. Kirkpatrick, "Sonia Landy Sheridan", Woman's Art J. 1, 56-59 (1980).

44. T. DeFanti, "The Digital Component of the Circle Graphics Habitat", Proc. NCC 195-203 (1976).

45. T. DeFanti, "Language Control for Easy Electronic Visualization", BYTE 90-106 (1980).

46. I.E. Sutherland, "Computer Displays", Scientific American June 1970.

47. C. Csuri, ed., Interactive Sound and Visual Systems. Catalog, College of the Arts, Ohio State University, Columbus, 1970.

48. R. Baecker, "Digital Video Displays and Dynamic Graphics", Computer Graphics (Proc. SIGGRAPH 79), 13, 48-56 (1979).

49. K. Basset, "Schreibmaschinengrafik", H.W. Franke and G. Jäger, eds., Apparative Kunst. Vom Kaleidoskop zum Computer, DuMont Schauberg, Cologne, 1973, pp. 168-171.

50. H. Cohen et al., eds., The First Artificial Intelligence Coloring Book. Kaufmann, Menlo Park, Calif., 1984.
